A mother steps on the brakes, bringing her car to a stop as she drops her kids off for dance lessons. At the time, she doesn’t notice anything wrong, but when she takes her car in for its regular service appointment, the mechanic conducts a diagnostic check and discovers that the primary brake system on the car had failed because of a faulty braking controller without anyone realizing it. Fortunately, the car was able to stop successfully due to the vehicle’s system redundancies, and the dealer’s diagnostic test confirms that since that first chip failure, another one has not occurred. The braking systems are behaving normally.

Following that, the dealership sends the information about the braking failure to the manufacturer, where an analyst notes that over the last 60 days, and around the country, six other brake failures traced back to the same controller system have been reported for the same make and model. In each of these situations, the backup system successfully brought each car to a complete stop. And, as in the case with the mother who dropped her kids off at dance class, the analyst looks at the reporting samples for these six other failures and determines that each is isolated and non-recurring.

For high-performance computing, artificial intelligence, and data centers, the path ahead is certain, but with it comes a change in substrate format and processing requirements. Instead of relying on the quest for the next technology node to bring about future device performance gains, manufacturers are charting a future based increasingly on heterogeneous integration.

But while heterogeneous integration promises more functionality, faster data transfer, and lower power consumption, these chiplet combinations, with different functionalities and nodes, will require increasingly large packages, with sizes of 75mm x 75mm, 150mm x 150mm, or even larger.

To further complicate matters, these packages will also feature elevated numbers of redistribution layers, in some cases as high as 24 layers. And with each of those layers, the threat of a single killer defect, which would effectively ruin an entire package, increases. As such, the ability to maintain high yields becomes increasingly difficult.
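The compounding effect of layer count on yield can be illustrated with a minimal sketch. It assumes that each redistribution layer survives independently with the same probability and that one killer defect in any layer ruins the package. The 99% per-layer yield is a hypothetical figure chosen for illustration, not a number from this article.

```python
def package_yield(per_layer_yield: float, num_layers: int) -> float:
    """Probability that every layer is free of killer defects.

    Simplifying assumption: layers fail independently and share the
    same per-layer yield, so the package yield is their product.
    """
    if not 0.0 <= per_layer_yield <= 1.0:
        raise ValueError("per_layer_yield must be between 0 and 1")
    if num_layers < 0:
        raise ValueError("num_layers must be non-negative")
    return per_layer_yield ** num_layers


# Hypothetical per-layer yield, used only to show the trend.
PER_LAYER_YIELD = 0.99

for layers in (4, 8, 16, 24):
    result = package_yield(PER_LAYER_YIELD, layers)
    print(f"{layers:2d} RDL layers -> package yield {result:.1%}")
```

Under these assumptions, a 24-layer package yields just under 79% even though each individual layer yields 99%, which is why per-layer defect control matters more as layer counts rise.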

The More than Moore era is upon us, as manufacturers increasingly turn to back-end advances to meet the next-generation device performance gains of today and tomorrow. In the advanced packaging space, heterogeneous integration is one tool helping accomplish these gains by combining multiple silicon nodes and designs inside one package.

But as with any technology, heterogeneous integration, and the fan-out panel-level packaging that often enables it, comes with its own set of unique challenges. For starters, package sizes are expected to grow significantly due to the number of components making up each integrated package. The problem: these significantly bigger packages require multiple exposure shots to complete the lithography steps for the package. Adding to this, multiple redistribution layers (RDLs) may cause stress both on the surface and inside the substrate, resulting in warpage. And then there is the matter of tightening resolution requirements and more stringent overlay needs.

Heterogeneous integration enables multiple chips from varying silicon processes to deliver superior performance. In large panel packages, present-day limits on exposure field size force manufacturers to ‘stitch’ together multiple reticles, which slows throughput and increases costs. Onto Innovation’s new JetStep® X500 system dramatically increases the exposure field up to 250 x 250 mm, slashing the number of exposures needed and cutting costs in FOPLP applications.
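To show how exposure field size drives the number of shots per package, here is a rough sketch. It tiles a rectangular package with rectangular exposure fields and ignores any overlap needed at stitch boundaries. The 60 x 60 mm field is a hypothetical stand-in for a smaller exposure field, and the function name is illustrative. The 250 x 250 mm field and the 75mm and 150mm package sizes are the figures quoted in this text.

```python
import math


def shots_per_package(package_mm, field_mm):
    """Minimum number of exposure shots to cover one package.

    Simplifying assumption: fields tile the package edge to edge,
    with no extra overlap reserved for stitching.
    """
    package_w, package_h = package_mm
    field_w, field_h = field_mm
    return math.ceil(package_w / field_w) * math.ceil(package_h / field_h)


SMALL_FIELD_MM = (60, 60)    # hypothetical smaller exposure field
LARGE_FIELD_MM = (250, 250)  # exposure field size quoted in the text

for package in ((75, 75), (150, 150)):
    small = shots_per_package(package, SMALL_FIELD_MM)
    large = shots_per_package(package, LARGE_FIELD_MM)
    print(f"{package[0]}mm x {package[1]}mm package: "
          f"{small} shots (small field) vs {large} shot(s) (large field)")
```

With the hypothetical 60 x 60 mm field, a 150mm x 150mm package needs nine stitched shots. With a 250 x 250 mm field, it fits in a single exposure.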

High-performance compute, 5G, smartphones, data centers, automotive, artificial intelligence (AI) and the Internet of Things (IoT) – all rely on heterogeneous integration to achieve next-level performance gains. By combining multiple silicon nodes and designs inside one package, ranging in size from 75mm x 75mm to 150mm x 150mm, heterogeneous integration is one factor bringing us closer to an era in which technology is beneficially embedded into nearly all aspects of our lives, whether it’s in the smart factories where we work, the self-driving cars that navigate the cities in which we live, the mobile devices that connect us to each other or the wearable devices that help us live healthier lives.

Regardless of the speed at which we are approaching this promising new era, this transition comes with increasing challenges, ones that are constrained by increasingly stringent requirements. The next generation of heterogeneous integration technologies, and the fan-out panel-level packaging that often accompanies them, will demand even tighter overlay requirements to accommodate larger package sizes with fine-pitch chip interconnects on large-format, 510mm x 515mm flexible panels.

Over the past ten years, primarily driven by a tremendous expansion in the availability of data and computing power, artificial intelligence (AI) and machine-learning (ML) technologies have found their way into many different areas and have changed our way of life and our ability to solve problems. Today, artificial intelligence and machine learning are being used to refine online search results, facilitate online shopping, customize advertising, tailor online news feeds and guide self-driving cars. The future that so many have dreamed of is just over the horizon, if not happening right now.

The term artificial intelligence was first introduced in the 1950s, and the idea behind it is famously associated with Alan Turing. The noted mathematician and creator of the so-called Turing Test believed that one day machines would be able to imitate human beings by doing intelligent things, whether those intelligent things meant playing chess or having a conversation. Machine learning is a subset of AI. Machine learning allows for the automation of learning based on an evaluation of past results against specified criteria. Deep learning (DL) is a subset of machine learning (FIGURE 1). Deep learning employs a multi-layered learning hierarchy in which the output of each layer serves as the input for the next layer.
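The layered structure described above, in which each layer's output becomes the next layer's input, can be sketched in a few lines. This is a toy forward pass only: the weights are arbitrary hand-picked values rather than trained ones, and the function names are illustrative, not drawn from any particular library.

```python
def relu(values):
    """Simple nonlinearity: negative values are clipped to zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each weight row yields one output."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the output of each layer into the next layer in turn."""
    activation = inputs
    for weights, biases in layers:
        activation = relu(dense(activation, weights, biases))
    return activation


# Arbitrary illustrative weights (not trained): 2 inputs -> 2 units -> 1 unit.
LAYERS = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0]], [-0.5]),
]

print(forward([2.0, 1.0], LAYERS))
```

In a real deep learning system the weights would be adjusted automatically from data. The sketch only shows the chaining of layers that gives deep learning its name.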

Currently, the semiconductor manufacturing industry uses artificial intelligence and machine learning to take data and autonomously learn from that data. With the additional data, AI and ML can be used to quickly discover patterns and determine correlations in various applications, most notably those applications involving metrology and inspection, whether in the front-end of the manufacturing process or in the back-end. These applications may include AI-based spatial pattern recognition (SPR) systems for inline wafer monitoring [2], automatic defect classification (ADC) systems with machine-learning models and machine learning-based optical critical dimension (OCD) metrology systems [1][7].

When the subject of hybrid bonding is brought up in the industry, the focus is often on how this technique is used to manufacture CMOS image sensors (CIS), an essential device for today’s digital cameras, particularly those found in smartphones. As such, CIS is a common touchpoint given the ubiquity of mobile phones, whether you hold a product from Apple, Samsung or Huawei in your hands.

But while today’s CIS devices currently dominate the use of hybrid bonding, high-performance computing (HPC) is emerging as a new high-growth application for hybrid bonding. This is a result of the trend toward finer-pitch interconnects in advanced 3D packaged memory technologies. In addition, the market for high-end performance packaging, including both 2.5D and 3D packaging, is expected to reach $7.87B by 2027, with a compound annual growth rate (CAGR) of 19% from 2021 to 2027, according to Yole Développement. As for 3D stacked packaging alone, it is expected to grow at a CAGR of 58% to 70% during the same period.

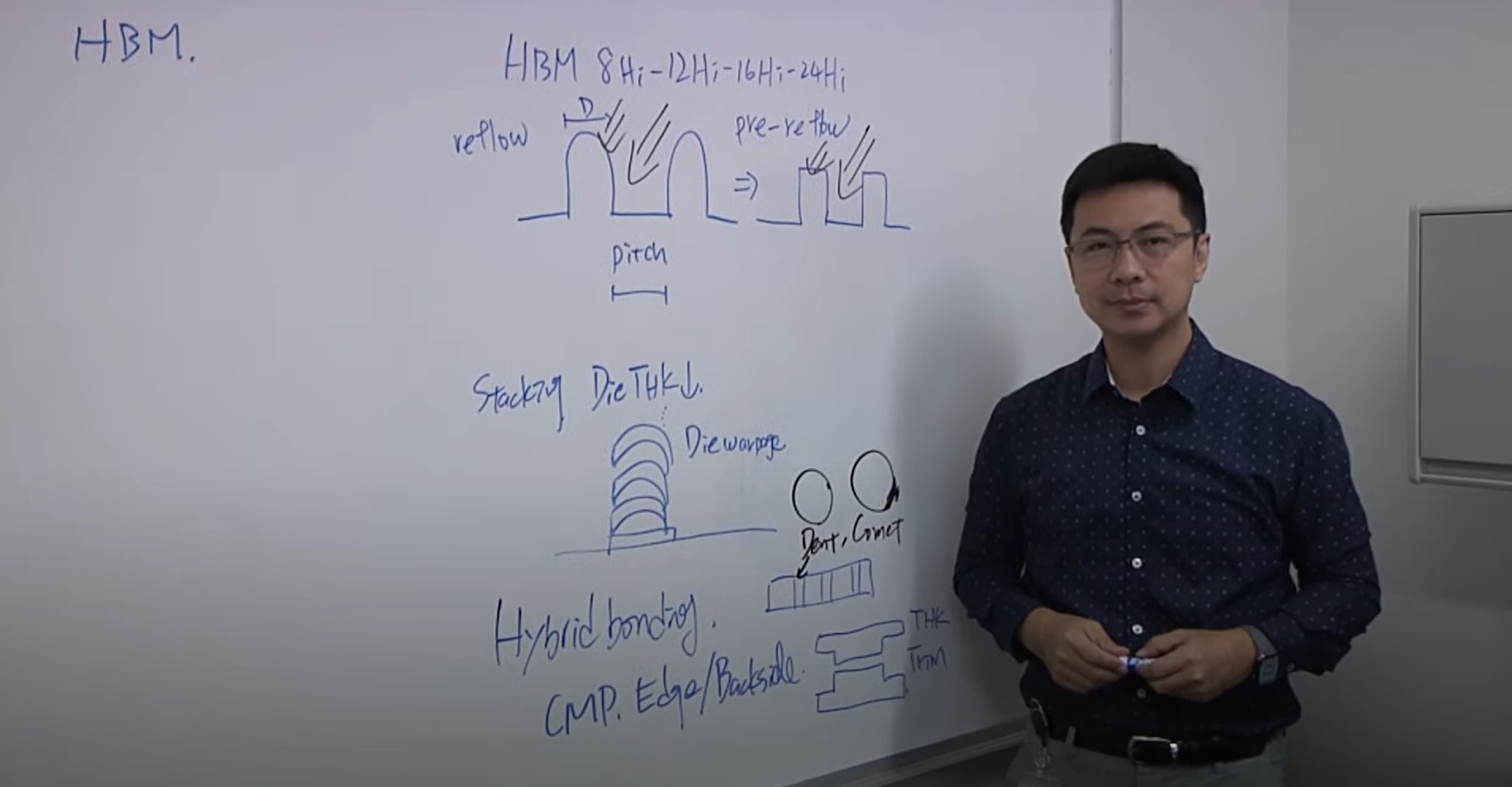

Using direct Cu-to-Cu connections instead of bumps and suitable for pitches of less than 10μm, hybrid bonding often involves the direct stacking of two wafers, with the space between the two planarized surfaces approaching zero. Hybrid bonding has advantages over conventional micro-bumping, such as enabling smaller I/O terminals and reducing interconnect pitch. But while both hybrid bonding and conventional micro-bumping support higher-density interconnect schemes, hybrid bonding is an expensive process compared to bumping and requires much tighter process control, especially in the areas of defect inspection, planarity measurement and void detection.

While wafer-to-wafer bonding has already been demonstrated for NAND devices and is currently used in CIS manufacturing for the integration of the imager layer and logic, DRAM manufacturers are also looking to adapt hybrid bonding to replace bumps. HBM (high-bandwidth memory) die are vertically stacked in 4-, 8-, 12- or 16-die stacks, and a hybrid bonding interconnect scheme is capable of reducing the overall package thickness by tens and possibly hundreds of microns in certain situations. The gap between each die is about 30μm when bumps are used, but the gap is nearly zero with hybrid bonding.
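The thickness figures follow from simple arithmetic, sketched below. The sketch assumes that an n-die stack has n - 1 inter-die gaps, that each bumped gap is the roughly 30μm quoted above, and that the hybrid-bonded gap can be treated as exactly zero. It counts only inter-die gaps and ignores die thickness and any base-die interface, so the results are illustrative rather than product data.

```python
BUMP_GAP_UM = 30.0    # approximate bumped gap quoted in the text
HYBRID_GAP_UM = 0.0   # "nearly zero", idealized as exactly zero here


def total_gap_um(num_dies: int, gap_um: float) -> float:
    """Sum of the inter-die gaps in a vertical stack.

    Assumption: a stack of n dies has n - 1 gaps between dies.
    """
    if num_dies < 1:
        raise ValueError("a stack needs at least one die")
    return (num_dies - 1) * gap_um


for dies in (4, 8, 12, 16):
    saved = total_gap_um(dies, BUMP_GAP_UM) - total_gap_um(dies, HYBRID_GAP_UM)
    print(f"{dies:2d}-die stack: about {saved:.0f} um of gap height removed")
```

Under these assumptions the savings run from about 90μm for a 4-die stack to about 450μm for a 16-die stack, consistent with the range of tens to hundreds of microns.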